Technology Assisted Review with Iterative Classification

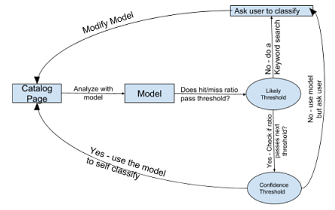

Manually extracting information from large text-oriented datasets can be impractical, and language variations in the text can defy simple keyword-driven searches. This suggests the need for an intermediate approach that would allow for a technology assisted review of the documents. This project explores the impact of keeping the human in the loop in the early stages of data mining efforts to help direct the outcome. The research team developed a tool to allow users to interactively classify individual pages of a large text document and feed the results into a learning algorithm. As the algorithm's predictive accuracy improves, it continually assumes a more informed role in selecting text segments for the human to evaluate until it is reliable enough to finish the classification task unsupervised. We expect that the incremental training approach can efficiently identify a core set of data, and believe it shows promise in this kind of application.
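
The iterative loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the project summary does not name the learning algorithm or the selection strategy, so the sketch substitutes a tiny Naive Bayes classifier and uncertainty sampling (asking about the page the model is least sure of). The names `TinyNaiveBayes`, `assisted_review`, `ask_human`, and the `confidence` threshold are hypothetical.

```python
import math
from collections import Counter


class TinyNaiveBayes:
    """Minimal two-class Naive Bayes with add-one smoothing (illustrative only)."""

    def __init__(self):
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = {0: 0, 1: 0}
        self.vocab = set()

    def update(self, text, label):
        """Incrementally train on one human-labeled page (label is 0 or 1)."""
        words = text.lower().split()
        self.word_counts[label].update(words)
        self.doc_counts[label] += 1
        self.vocab.update(words)

    def prob_relevant(self, text):
        """Estimated probability that the page belongs to class 1."""
        total_docs = sum(self.doc_counts.values())
        log_score = {}
        for label in (0, 1):
            score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
            denom = sum(self.word_counts[label].values()) + len(self.vocab) + 1
            for word in text.lower().split():
                score += math.log((self.word_counts[label][word] + 1) / denom)
            log_score[label] = score
        top = max(log_score.values())
        weights = {k: math.exp(v - top) for k, v in log_score.items()}
        return weights[1] / (weights[0] + weights[1])


def assisted_review(pages, ask_human, confidence=0.9):
    """Ask the human about the least certain page until the model is confident.

    Returns (labels, asked): a dict of page index -> 0/1 and the number of
    pages the human actually had to classify.
    """
    model = TinyNaiveBayes()
    labels = {}
    unlabeled = list(range(len(pages)))
    asked = 0
    while unlabeled:
        # Distance from 0.5: a small margin means the model is unsure.
        margin = {i: abs(model.prob_relevant(pages[i]) - 0.5) for i in unlabeled}
        pick = min(unlabeled, key=margin.get)
        seen_both = min(model.doc_counts.values()) > 0
        if seen_both and margin[pick] >= confidence - 0.5:
            break  # even the least certain page is confidently classified
        labels[pick] = ask_human(pages[pick])
        model.update(pages[pick], labels[pick])
        unlabeled.remove(pick)
        asked += 1
    # Finish the remaining pages without human input.
    for i in unlabeled:
        labels[i] = int(model.prob_relevant(pages[i]) >= 0.5)
    return labels, asked
```

In an interactive tool, `ask_human` would presumably display the page and wait for the reviewer's decision; for experimentation it can be any function that maps a page's text to 0 or 1.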

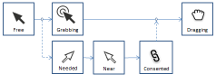

Collaboration Strategies for Drag-and-Drop Interactions with Multiple Devices

ManyMouse is a software tool that revisits the ability of multiple users to collaborate by connecting their personal independent mouse hardware to a shared computer. This tool, implemented at the system level, assigns each input device to its own simulated mouse cursor, extending the potential for collaboration to any application. ManyMouse is currently being used as a platform to explore various coordination strategies and assess their impact on learning potential. Previous work on this topic has largely focused on inferring a group’s intention from the relative positioning of the mouse pointers. This work attempts to extend those ideas to include coordination of direct actions such as drag-and-drop.

Beginning and Advanced CS for Teachers

CS4HS is an annual grant program from Google that promotes computer science education worldwide by connecting educators to the skills and resources they need to teach computer science.

This project involved the design and delivery of online materials for learning computing concepts through the lens of mobile app development. Participants engaged in several small-scale app development projects that modeled a progression of skills for their students to learn.

Materials for this course can be found at:

https://uni-cs4hs-appinventor.appspot.com/preview

Raw videos for the course are also accessible on YouTube:

https://www.youtube.com/channel/UCbZR4YmCn9EYlz5D_voO-Ig/videos

Shared Display Interaction with Mobile Devices

Traditional clicker systems typically interpret responses as data to be stored and aggregated, then visualized as a graph. This research attempts to harness the flexibility of the smartphone to shift this approach. Instead of interpreting student input as data, student responses can be treated as instructions, allowing students to collectively manipulate a shared display. For this study, CICS has been expanded to support the ability to manipulate the cursor's movement using a smartphone to simulate a remote trackpad.

The goal of this study was to better understand how a collaborative influence impacts the performance of simple tasks like cursor movement and selection. To objectively measure the performance of this technique, multiple users were asked to participate in a series of Fitts Selection Tasks. The results suggest that it is possible for multiple users to collaboratively move a single pointer without significant degradation in performance.
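
For readers unfamiliar with Fitts selection tasks, the short sketch below shows how the difficulty of a pointing task is commonly quantified. It uses the widely cited Shannon formulation of the index of difficulty, ID = log2(D / W + 1), where D is the distance to the target and W is the target width. The summary above does not say which formulation or metrics the study used, so the function names and the simple throughput ratio here are assumptions for illustration only.

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts's index of difficulty, in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def throughput(distance, width, movement_time_s):
    """Simplified throughput in bits per second: ID divided by movement time.

    Published evaluations often use an effective target width computed from
    the observed selection points; that refinement is omitted here.
    """
    if movement_time_s <= 0:
        raise ValueError("movement_time_s must be > 0")
    return index_of_difficulty(distance, width) / movement_time_s
```

Comparing such values between single-user and shared-pointer conditions is one plausible way to check for a degradation in performance.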

Software Promoted Interaction in Speech-Language Therapy

Semantic Feature Analysis is a speech-language treatment that is frequently used with individuals who have suffered strokes or brain injuries and who demonstrate difficulty accessing the words they wish to say. The focus of this treatment, as with many kinds of speech-language therapy, is the interaction that takes place between the clinician and the patient.

With the rapidly expanding use of technology, many clinicians in medical settings are exploring ways to incorporate new tools into their therapy sessions. The emergence of mobile and handheld technologies is particularly appealing because of their collaborative potential. The ability for a patient and speech-language pathologist to engage in Semantic Feature Analysis through a shared tablet or other shared display would not only expand the range and accessibility of test items but could also facilitate the record-keeping tasks of the clinician. More importantly, with a shared display, the essential quality of the exercise (interaction between the participants) is preserved in a way that is not possible with a traditional desktop device.

Classroom Collaboration with Mobile Devices

One of the major perceived barriers to the adoption of Classroom Response Systems, a.k.a. "clickers", is limited interactivity. Students using dedicated clicker hardware are often only able to provide basic multiple choice or simple numeric responses. The accessibility and flexibility of smartphones make them an intriguing platform for managing some of the shortfalls of traditional classroom response systems. This approach not only leverages a resource that a growing number of students already own, but also aims to enhance the effectiveness of the system by tapping into the robust interaction capabilities that these devices afford.

Virtual Puppetry

This project explores virtual puppetry as a potential mechanism for enhancing students' presence in a virtual learning environment. Developing this style of interaction requires substantial attention to the user interface in order to promote the operator's (puppeteer's) situation awareness of both the real and virtual environments. This project developed an initial prototype and identified some of the ongoing concerns for controlling virtual puppets.

CaveRC

Large, multi-screen displays can be used to foster a sense of immersion in a virtual environment, which in turn leads to better transfer and retention of spatial information. This project seeks to isolate the underlying factors that contribute to the sense of presence that viewers feel when interacting with this type of display.

Behavioral Queries

This research explores "Behavioral Based Visual Queries" to balance the objectivity of query languages and the rapid insight afforded through visualization. In this model, each row of a database table is represented by a visual data avatar that is programmed with certain behaviors. Users can specify queries by using familiar interface components to introduce stimuli to a collection of avatars. As each avatar reacts to the stimuli and other avatars, emergent behaviors can be interpreted as a query result.
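
To make the idea of avatars and stimuli more concrete, here is a deliberately small sketch. Everything in it is hypothetical: the classes `Avatar` and `Stimulus`, the one-dimensional position, and the single "move toward an attractive stimulus" behavior are assumptions chosen for illustration, not the behaviors used in the research. Reactions between avatars, which the description above also mentions, are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    """A hypothetical stimulus placed at position x on a 1-D display."""
    x: float
    column: str
    threshold: float

    def attracts(self, row):
        # Assumed rule: rows whose value meets the threshold are attracted.
        return row[self.column] >= self.threshold


@dataclass
class Avatar:
    """One avatar per database row; every avatar starts at position 0."""
    row: dict
    x: float = 0.0

    def react(self, stimulus):
        # Assumed behavior: move halfway toward an attractive stimulus.
        if stimulus.attracts(self.row):
            self.x += 0.5 * (stimulus.x - self.x)


def run_query(rows, stimulus, steps=10, radius=1.0):
    """Let avatars react for a few steps, then read the cluster near the stimulus."""
    avatars = [Avatar(row) for row in rows]
    for _ in range(steps):
        for avatar in avatars:
            avatar.react(stimulus)
    return [a.row for a in avatars if abs(a.x - stimulus.x) <= radius]
```

In this toy version, the rows whose avatars end up gathered around the stimulus play the role of the query result, loosely comparable to a filter on one column.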

Attentive Navigation

The ability to manipulate the viewpoint is critical to many tasks performed in virtual environments (VEs). However, viewers in an information-rich VE are particularly susceptible to superfluous data and are easily distracted. Attentive Navigation techniques can address this issue by allowing the viewer to explore freely while allowing the system to suggest optimal locations and orientations. This provides a supportive yet unscripted exploration of a Virtual Environment.
